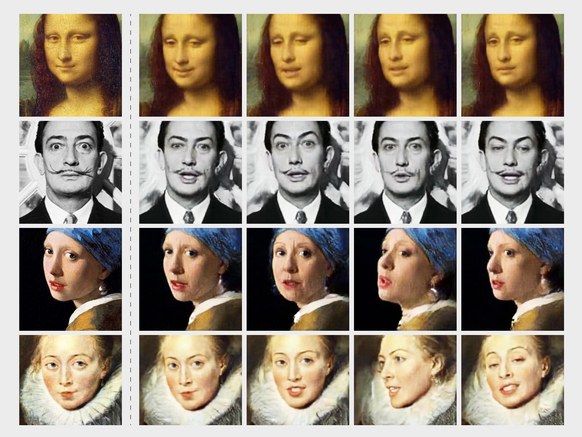

Deepfakes generated from a single image. The technique sparked concerns that high-quality fakes are coming for the masses. But don’t get too worried, yet. (credit: Egor Zakharov, Aliaksandra Shysheya, Egor Burkov, Victor Lempitsky)

Last week, Mona Lisa smiled. A big, wide smile, followed by what appeared to be a laugh and the silent mouthing of words that could only be an answer to the mystery that had beguiled her viewers for centuries.

A great many people were unnerved.

Mona’s “living portrait,” along with likenesses of Marilyn Monroe, Salvador Dali, and others, demonstrated the latest technology in deepfakes—seemingly realistic video or audio generated using machine learning. Developed by researchers at Samsung’s AI lab in Moscow, the portraits display a new method to create credible videos from a single image. With just a few photographs of real faces, the results improve dramatically, producing what the authors describe as “photorealistic talking heads.” The researchers (creepily) call the result “puppeteering,” a reference to how invisible strings seem to manipulate the targeted face. And yes, it could, in theory, be used to animate your Facebook profile photo. But don’t freak out about having strings maliciously pulling your visage anytime soon.

